(tl;dr: There’s a lot going on, and I have some sage, if painful, advice for those of you who think Mozilla is just ruining your ability to do what you do. But this advice is worth exactly what you pay to read it. If you don’t care about a deeper discussion, just move to the next article.)

The last few weeks on Planet Mozilla have had some interesting moments: great, good, bad, and ugly. Honestly, all the recent traffic has impacts on me professionally, both present and future, so I’m going to respond very cautiously here. Please forgive the piling on – and understand that I’m not entirely opposed to the most controversial piece.

- WebAssembly. It just so happens I’m taking an assembly language course now at Chabot College. So I want to hear more about this. I don’t think anyone’s going to complain much about faster JavaScript execution… until someone finds a way to break out of the .wasm sandboxing, of course. I really want to be a part of that.

- ECMAScript 6th Edition versus the current Web: I’m looking forward to Christian Heilmann’s revised thoughts on the subject. On my pet projects, I find the new features of ECMAScript 6 gloriously fun to use, and I hate working with JS that doesn’t fully support it. (CoffeeScript, are you listening?)

- WebDriver: Professionally I have a very high interest in this. I think three of the companies I’ve worked for, including FileThis (my current employer), could benefit from participating in the development of the WebDriver spec. I need to get involved in this.

- Electrolysis: I think in general it’s a good thing. Right now when one webpage misbehaves, it can affect the whole Firefox instance that’s running.

- Scripts as modules: I love .jsm’s, and I see in relevant bugs that some consensus on ECMAScript 6-based modules is starting to really come together. Long overdue, but there’s definitely traction, and it’s worth watching.

- Pocket in Firefox: I haven’t used it, and I’m not interested. As for it being a “surprise”: I’ll come back to that in a moment.

- Rust and Servo: Congratulations on Rust reaching 1.0 – that’s a pretty big milestone. I haven’t had enough time to take a deep look at it. Ditto Servo. It must be nice having a team dedicated to researching and developing new ideas like this, without a specific business goal. I’m envious. 🙂

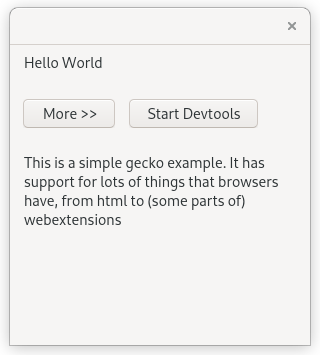

- Developer Tools: My apologies for nagging too much about one particular bug that really hurts us at FileThis, but I do understand there’s a lot of other important work to be done. If I understood how the devtools protocols worked, I could try to fix the bug myself. I wish I could have a live video chat with the right people there, or some reference OGG videos, to help out… but videos would quickly become obsolete documentation.

- WebExtensions, XPCOM and XUL: Uh oh.

First of all, I’m more focused on running custom XUL apps via firefox -app than I am on extensions to base-line Firefox. I read the announcement about this very, very carefully. I note that there was no mention of XUL applications being affected, only XUL-based add-ons. The headline said “Deprecation of XUL, XPCOM…” but the text makes it clear that this applies mostly to add-ons. So for the moment, I can live with it.

Mozilla’s staff has been sending mixed messages, though. On the one hand, we’re finally getting a Firefox-based SDK into regular production. (Sorry, guys, I really wish I could have driven that to completion.) On the other, XUL development itself is considered dead – no new features will be added to the language, as I found to my dismay when a XUL tree bug I’d been interested in was WONTFIX’ed. Ditto XBL, and possibly XPCOM itself. In other words, what I’ve specialized in for the last dozen years is becoming obsolete knowledge.

I mean, I get it: the Web has to evolve, and so do the user-agents (note I didn’t say “browsers”, deliberately) that deliver it to human beings. It’s a brutal Darwinian process of not just technologies, but ideas: what works, spreads – and what’s hard for average people (or developers) to work with, dies off.

But here’s the thing: Mozilla, Google, Microsoft, and Opera all have huge customer bases to serve with their browser products, and their customer bases aren’t necessarily the same as yours or mine (other developers, other businesses). In one sense we should be grateful that all these ideas are being tried out. In another, it’s really hard for third parties like FileThis or TenFourFox or NoScript or Disruptive Innovations, who have far fewer resources and different business goals, to keep up with the brutally fast Darwinian pace these major companies have set for themselves. (They say it’s for their customers, and they’re probably right, but we’re coughing on the dust trails they kick up.) Switching to an “extended support release” branch only gives you a longer stability cycle… for a while, anyway, and then you’re back in catch-up mode.

A browser for the World Wide Web is a complex beast to build and maintain, and growing more so every year. That’s because in the mad scramble to provide better services for Web end-users, browser vendors add new technologies and new ideas rapidly, but they also retire “undesirable” technologies. Maybe not so rapidly – I do feel sympathy for those who complain about CSS prefixes being abused in the wild, for example – but the core products of these browser providers do eventually move on from what, in their collective opinions, just isn’t worth supporting anymore.

So what do you do if you’re building a third-party product that relies on Mozilla Firefox supporting something that’s fallen out of favor?

Well, obviously, the first thing you do is complain on your weblog that gets syndicated to Planet Mozilla. That’s what I’m doing, isn’t it? 🙂

Ultimately, though, you have to own the code. I’m going to speak very carefully here.

In economic terms, we web developers deal with an oligopoly of web browser vendors: a very small but dominant set of players in the web browsing “market”. They spend vast resources building, maintaining and supporting their products and largely give them away for free. In theory the barriers to entry are small, especially for WebKit-based and Gecko-based browsers: download the source, customize it, build and deploy.

In practice… maintenance of these products is extremely difficult. If there’s a bug in NSS or the browser devtools, I’m not the best person to fix it. But I’m the Mozilla expert where I work, and usually have been.

I think it isn’t a stretch to say that web browsers, because of the sheer number of features needed to satisfy the average end-user, rapidly approach the complexity of a full-blown operating system. That’s right: Firefox is your operating system for accessing the Web. Or Chrome is. Or Opera, or Safari. It’s not just HTML, CSS and JavaScript anymore: it’s audio, video, security, debuggers, automatic updates, add-ons that are mini-programs in their own right, canvases, multithreading, just-in-time compilation, support for mobile devices, animations, et cetera. Plus the standards, which are also evolving at high frequencies.

My point in all this is as I said above: we third-party developers have to own the code, even code bases far too large for us to properly own anymore. What do I mean by ownership? Some would say, “deal with it as best you can”. Some would say, “Oh yeah? Fork you!” Someone truly crazy (me) would say, “consider what it would take to build your own.”

I mean that. Really. I don’t mean “build your own.” I mean, “consider what you would require to do this independently of the big browser vendors.”

If that thought – building something that fits your needs and is complex enough to satisfy your audience of web end-users, who are accustomed to what Mozilla Firefox or Google Chrome or Microsoft Edge, etc., provide them already, complete with back-end support infrastructure to make it seamlessly work 99.999% of the time – scares you, then congratulations: you’re aware of your limited lifespan and time available to spend on such a project.

For what it’s worth, I am considering such an idea. For the future, when it comes time to build my own company around my own ideas. That idea scares the heck out of me. But I’m still thinking about it.

Just like reading this article, when it comes to building your products, you get what you pay for. Or more accurately, you only own what you’re paying for. The rest of it… that’s a side effect of the business or industry you’re working in, and you’re not in control of these external factors you subconsciously rely on.

Bottom line: browser vendors are out to serve their customer bases, which are tens of millions, if not hundreds of millions of people in size. How much of the code, of the product, that you are complaining about do you truly own? How much of it do you understand, and how much could you support on your own? The chances are, you’re relying on benevolent dictators in this oligopoly of web browsers.

It’s not a bad thing, except when their interests don’t align with yours as a developer. Then it’s merely an inconvenience… for you. How much of an inconvenience? Only you can determine that.

Then you can write a long diatribe for Planet Mozilla about how much this hurts you.